When configuring Low Latency Queuing (LLQ), we can configure a minimum bandwidth or minimum remaining bandwidth. In this lesson, I’ll explain the difference between the two and we’ll verify this on a network.

Bandwidth

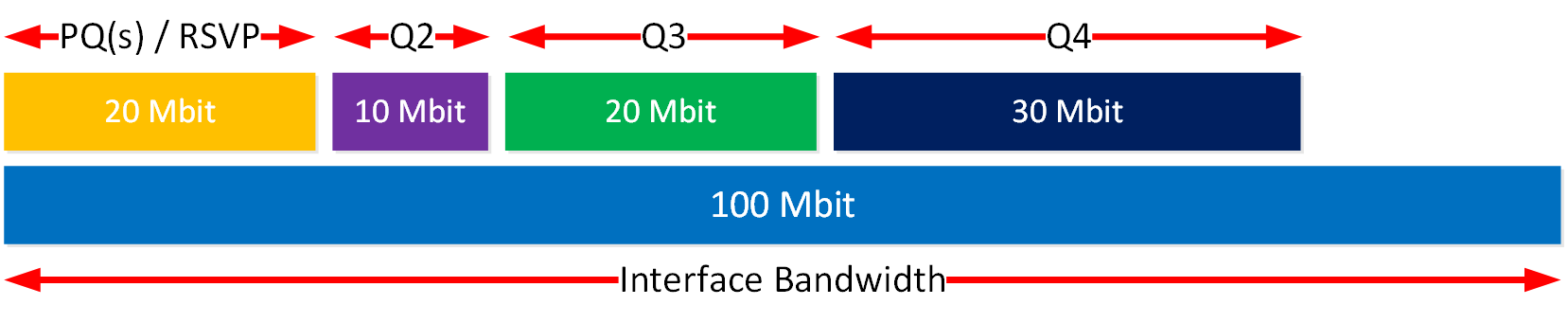

When you configure bandwidth, you specify a percentage of the total interface bandwidth. For example, let’s say we have a 100 Mbit interface and four queues:

- Priority queue:

- Q1: 20%

- CBWFQ queues:

- Q2: 10%

- Q3: 20%

- Q4: 30%

Your CBWFQ queues will have a percentage of the total interface bandwidth so the queues will have these minimum bandwidth guarantees:

- Q2: 10% of 100 Mbit = 10 Mbit

- Q3: 20% of 100 Mbit = 20 Mbit

- Q4: 30% of 100 Mbit = 30 Mbit
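
The calculation above can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the function name `absolute_guarantees` is hypothetical and not part of any Cisco tool.

```python
# Illustrative sketch: absolute_guarantees is a hypothetical helper,
# not something provided by Cisco IOS.
def absolute_guarantees(interface_mbps, percents):
    """Return the minimum bandwidth (in Mbit) per queue when each
    percentage is taken from the total interface bandwidth."""
    return {queue: interface_mbps * pct / 100 for queue, pct in percents.items()}


# CBWFQ queues from the example above.
cbwfq_queues = {"Q2": 10, "Q3": 20, "Q4": 30}

for queue, mbit in absolute_guarantees(100, cbwfq_queues).items():
    print(f"{queue}: {mbit:g} Mbit")
```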

Here’s a visualization:

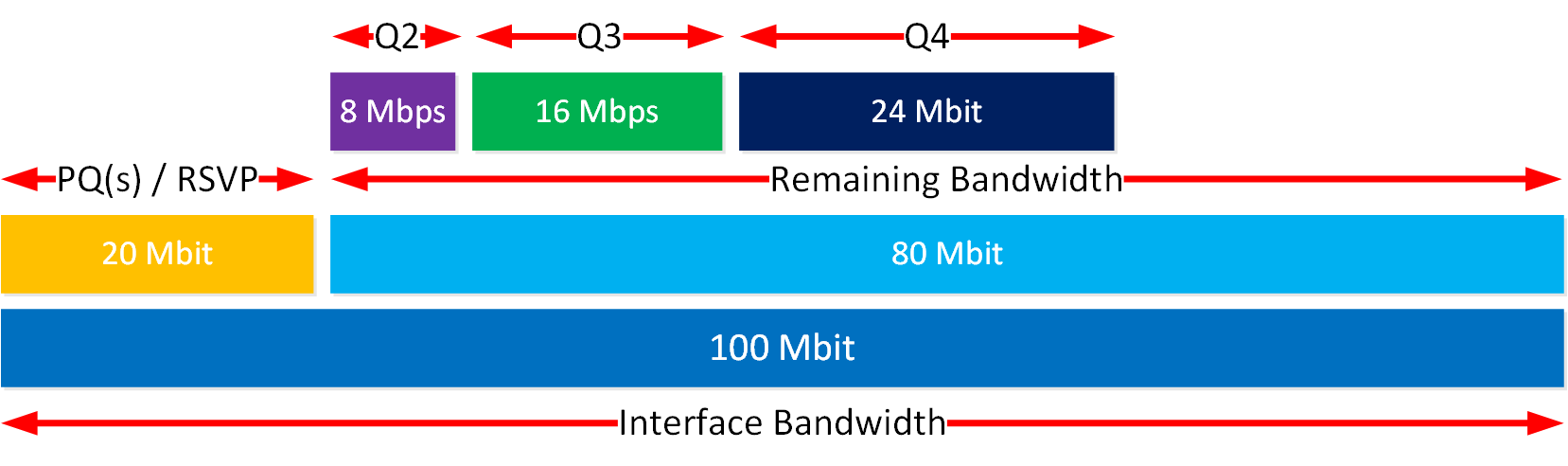

Bandwidth Remaining

Bandwidth remaining is a relative percentage of the remaining bandwidth: the bandwidth that is still available after the priority queue (and RSVP reservations).

Let’s use the same example of a 100 Mbit interface and four queues:

- Priority queue:

- Q1: 20%

- CBWFQ queues:

- Q2: 10%

- Q3: 20%

- Q4: 30%

The priority queue gets 20 Mbps. That means the available bandwidth is 100 Mbps – 20 Mbps = 80 Mbps. The other queues get a minimum bandwidth of:

- Q2: 10% of 80 Mbps = 8 Mbps

- Q3: 20% of 80 Mbps = 16 Mbps

- Q4: 30% of 80 Mbps = 24 Mbps
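
Here is a minimal Python sketch of the same arithmetic. The function name `remaining_guarantees` is hypothetical, and the sketch assumes there are no RSVP reservations, so only the priority queue is subtracted.

```python
# Illustrative sketch: remaining_guarantees is a hypothetical helper.
# Assumption: no RSVP reservations, only the priority queue is subtracted.
def remaining_guarantees(interface_mbps, priority_pct, remaining_percents):
    """Return the minimum bandwidth (in Mbps) per queue when each
    percentage is taken from what is left after the priority queue."""
    priority_mbps = interface_mbps * priority_pct / 100
    remaining_mbps = interface_mbps - priority_mbps
    return {
        queue: remaining_mbps * pct / 100
        for queue, pct in remaining_percents.items()
    }


# 100 Mbps interface, 20% priority queue, three CBWFQ queues.
guarantees = remaining_guarantees(100, 20, {"Q2": 10, "Q3": 20, "Q4": 30})
for queue, mbps in guarantees.items():
    print(f"{queue}: {mbps:g} Mbps")
```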

Here’s how to visualize this:

Now you know the difference between bandwidth and bandwidth remaining. Let’s look at it in action.

Configuration

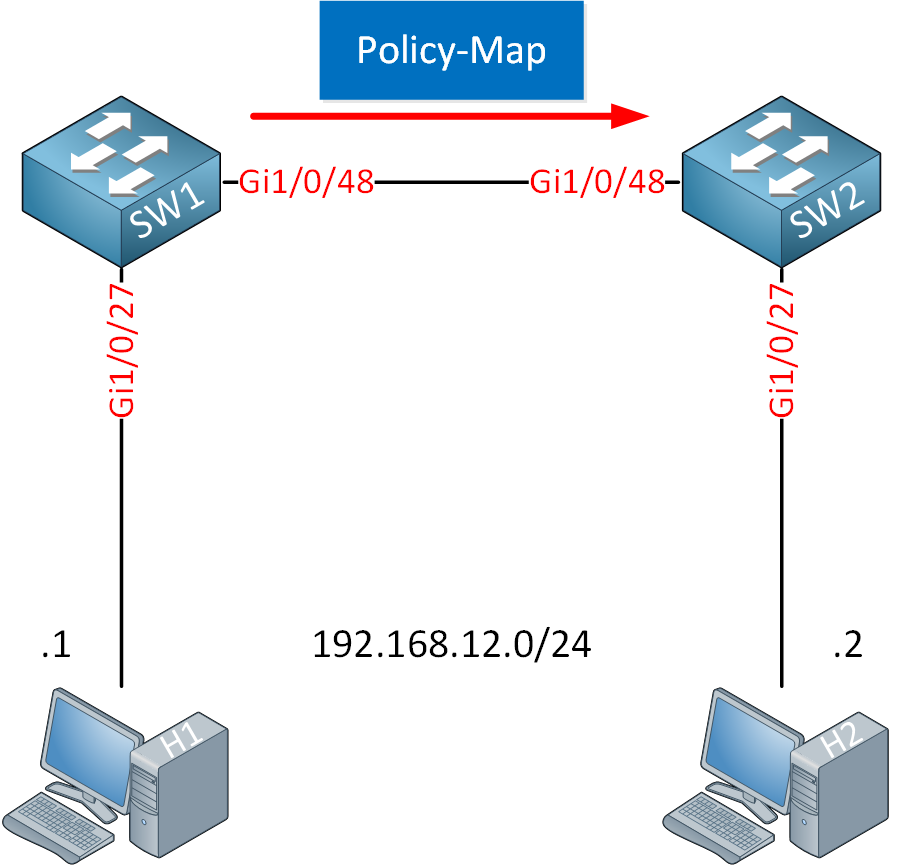

Below is the topology we’ll use:

We have two Linux hosts which I’ll use to generate traffic with iPerf:

- H1 is the iPerf client.

- H2 is the iPerf server.

I use WS-C3850-48T switches running Cisco IOS Software [Denali], Catalyst L3 Switch Software (CAT3K_CAA-UNIVERSALK9-M), Version 16.3.3, RELEASE SOFTWARE (fc3).

The hosts are connected with Gigabit Interfaces. I reduced the bandwidth of the Gi1/0/48 interfaces between SW1 and SW2 to 100 Mbps with the speed 100 command. This makes it easy to congest the connection between SW1 and SW2.

There are four simple class-maps. Each class-map matches a different DSCP value:

SW1#show running-config class-map

Building configuration...

Current configuration : 217 bytes

!

class-map match-any EF

match dscp ef

class-map match-any AF21

match dscp af21

class-map match-any AF31

match dscp af31

class-map match-any AF11

match dscp af11

Let’s configure H2 as the iPerf server:

$ iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 128 KByte (default)

------------------------------------------------------------

[ 4] local 192.168.12.2 port 5001 connected with 192.168.12.1 port 48824

Bandwidth

Let’s start with the bandwidth command. I’ll start four traffic streams:

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0xB8

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0x28

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0x48

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0x68

Let me explain what you see above:

- Each stream sends 50,000 kb/s of traffic.

- We show a periodic bandwidth report every second.

- Each traffic stream runs for 3600 seconds.

- We use different DSCP ToS hexadecimal values:

- B8: DSCP EF

- 28: DSCP AF11

- 48: DSCP AF21

- 68: DSCP AF31
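
The hexadecimal values above follow from how the ToS byte is built: the DSCP value occupies the upper six bits, so the ToS byte is the DSCP value shifted left by two bits. Here is a small Python sketch of that conversion. The helper name `dscp_to_tos` is hypothetical; the decimal DSCP values are the ones the switch shows in its output (for example, dscp ef (46)).

```python
# Decimal DSCP values as shown by the switch, e.g. "Match: dscp ef (46)".
DSCP_VALUES = {"EF": 46, "AF11": 10, "AF21": 18, "AF31": 26}


def dscp_to_tos(dscp):
    """Return the ToS byte for a DSCP value (hypothetical helper).

    DSCP uses the upper six bits of the ToS byte, so we shift left
    by two and leave the lower two bits at zero.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    return dscp << 2


for name, dscp in DSCP_VALUES.items():
    print(f"{name}: DSCP {dscp} -> ToS 0x{dscp_to_tos(dscp):02X}")
```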

Here is the policy-map that we’ll use:

SW1#show running-config policy-map BANDWIDTH

Building configuration...

Current configuration : 193 bytes

!

policy-map BANDWIDTH

class EF

priority level 1

police rate percent 20

class AF11

bandwidth percent 10

class AF21

bandwidth percent 20

class AF31

bandwidth percent 30

!

end

Let me explain what we have:

- DSCP EF: priority queue, policed to 20% of the interface bandwidth.

- DSCP AF11: bandwidth guarantee of 10% of the interface bandwidth.

- DSCP AF21: bandwidth guarantee of 20% of the interface bandwidth.

- DSCP AF31: bandwidth guarantee of 30% of the interface bandwidth.

Let’s activate this policy-map on the interface that connects SW1 to SW2:

SW1(config)#interface GigabitEthernet 1/0/48

SW1(config-if)#service-policy output BANDWIDTH

Here is the output of the policy-map:

SW1#show policy-map interface GigabitEthernet 1/0/48

GigabitEthernet1/0/48

Service-policy output: BANDWIDTH

queue stats for all priority classes:

Queueing

priority level 1

(total drops) 138138

(bytes output) 535149124

Class-map: EF (match-any)

341339 packets

Match: dscp ef (46)

0 packets, 0 bytes

5 minute rate 0 bps

Priority: Strict,

Priority Level: 1

police:

rate 20 %

rate 20000000 bps, burst 625000 bytes

conformed 438874960 bytes; actions:

transmit

exceeded 79292730 bytes; actions:

drop

conformed 8988000 bps, exceeded 1683000 bps

Class-map: AF11 (match-any)

193351 packets

Match: dscp af11 (10)

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

(total drops) 1103586

(bytes output) 305359154

bandwidth 10% (10000 kbps)

Class-map: AF21 (match-any)

263653 packets

Match: dscp af21 (18)

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

(total drops) 1630332

(bytes output) 419059354

bandwidth 20% (20000 kbps)

Class-map: AF31 (match-any)

334765 packets

Match: dscp af31 (26)

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

(total drops) 2023494

(bytes output) 533413204

bandwidth 30% (30000 kbps)

Class-map: class-default (match-any)

86 packets

Match: any

(total drops) 0

(bytes output) 512

We can see that each class is receiving packets. In iPerf, I see these traffic rates:

- EF: 19.9 Mbits/sec

- AF11: 15.7 Mbits/sec

- AF21: 25.2 Mbits/sec

- AF31: 33.6 Mbits/sec

The bandwidth percent command is an absolute percentage of the interface bandwidth (100 Mbps). Our DSCP EF traffic is policed at 20% (20 Mbps) so the interface has 80% (80 Mbps) remaining bandwidth. Here are our current bandwidth guarantees:

- AF11: 10% of 100 Mbps = 10 Mbps.

- AF21: 20% of 100 Mbps = 20 Mbps.

- AF31: 30% of 100 Mbps = 30 Mbps.

At this moment, we only have traffic marked with DSCP EF, AF11, AF21, and AF31. There are hardly any packets that end up in the class-default class. Our available bandwidth (80 Mbps) is higher than the sum of our bandwidth guarantees (10 + 20 + 30 = 60 Mbps), which is why you see these higher iPerf traffic rates.
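
To check how much bandwidth is not covered by any guarantee, we can subtract the priority queue and the sum of the guarantees from the interface bandwidth. Below is a rough Python sketch; the function name `unallocated_mbps` is hypothetical, and it only does the arithmetic. It does not model how the switch shares the leftover bandwidth between queues.

```python
# Illustrative sketch: unallocated_mbps is a hypothetical helper.
# It does not model how the scheduler distributes excess bandwidth.
def unallocated_mbps(interface_mbps, priority_pct, bandwidth_percents):
    """Return the bandwidth (in Mbps) that is neither policed for the
    priority queue nor guaranteed to a bandwidth percent class."""
    priority = interface_mbps * priority_pct / 100
    guaranteed = sum(interface_mbps * pct / 100 for pct in bandwidth_percents)
    return interface_mbps - priority - guaranteed


# 100 Mbps link, EF policed at 20%, AF11/AF21/AF31 at 10/20/30%.
print(f"Unallocated: {unallocated_mbps(100, 20, [10, 20, 30]):g} Mbps")
```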

If I generate some packets that don’t match any of my class-maps, they’ll end up in class-default and my non-priority queues will be limited to what I set with the bandwidth percent command.

I’ll start one more iPerf session without specifying a ToS byte:

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600

This traffic hits our class-default class:

SW1# show policy-map interface GigabitEthernet 1/0/48 output class class-default

GigabitEthernet1/0/48

Service-policy output: BANDWIDTH

queue stats for all priority classes:

Queueing

priority level 1

(total drops) 138138

(bytes output) 3041649934

Class-map: class-default (match-any)

42663 packets

Match: any

(total drops) 218592

(bytes output) 68535416

Here are the iPerf traffic rates that I see:

- EF: 19.9 Mbits/sec

- AF11: 15.7 Mbits/sec

- AF21: 23.1 Mbits/sec

- AF31: 31.5 Mbits/sec

- Class-default: 6.29 Mbits/sec

With the unmarked class-default traffic competing for our bandwidth, we see that the traffic rates for our AF11, AF21, and AF31 queues now look closer to our configured bandwidth guarantees.

Bandwidth Remaining

Now let’s try the bandwidth remaining command.

I’ll use the same four iPerf traffic streams to start with:

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0xB8

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0x28

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0x48

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600 -S 0x68

Here is the policy-map:

SW1#show running policy-map BANDWIDTH-REMAINING

Building configuration...

Current configuration : 233 bytes

!

policy-map BANDWIDTH-REMAINING

class EF

priority level 1

police rate percent 20

class AF11

bandwidth remaining percent 10

class AF21

bandwidth remaining percent 20

class AF31

bandwidth remaining percent 30

!

end

The policy-map is exactly the same, except this time we use the bandwidth remaining command. Let’s replace the policy-map on the interface:

SW1(config)#interface GigabitEthernet 1/0/48

SW1(config-if)#no service-policy output BANDWIDTH

SW1(config-if)#service-policy output BANDWIDTH-REMAINING

Let’s look at the policy-map in action:

SW1# show policy-map interface GigabitEthernet 1/0/48

GigabitEthernet1/0/48

Service-policy output: BANDWIDTH-REMAINING

queue stats for all priority classes:

Queueing

priority level 1

(total drops) 57684

(bytes output) 906260928

Class-map: EF (match-any)

587434 packets

Match: dscp ef (46)

0 packets, 0 bytes

5 minute rate 0 bps

Priority: Strict,

Priority Level: 1

police:

rate 20 %

rate 20000000 bps, burst 625000 bytes

conformed 739695594 bytes; actions:

transmit

exceeded 152047434 bytes; actions:

drop

conformed 12374000 bps, exceeded 2559000 bps

Class-map: AF11 (match-any)

317405 packets

Match: dscp af11 (10)

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

(total drops) 2018940

(bytes output) 489773592

bandwidth remaining 10%

Class-map: AF21 (match-any)

634930 packets

Match: dscp af21 (18)

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

(total drops) 3896706

(bytes output) 979542630

bandwidth remaining 20%

Class-map: AF31 (match-any)

961975 packets

Match: dscp af31 (26)

0 packets, 0 bytes

5 minute rate 0 bps

Queueing

(total drops) 5316036

(bytes output) 1484056002

bandwidth remaining 30%

Class-map: class-default (match-any)

66 packets

Match: any

(total drops) 0

(bytes output) 704

In the output above, we see that each class receives packets. Here are the iPerf traffic rates that I see:

- EF: 18.9 Mbits/sec

- AF11: 12.6 Mbits/sec

- AF21: 25.2 Mbits/sec

- AF31: 36.6 Mbits/sec

The bandwidth remaining percent command sets a relative percentage of the remaining bandwidth: the bandwidth that is still available after the priority queue (and RSVP reservations).

The priority queue gets 20 Mbps. That means the available bandwidth is 100 Mbps – 20 Mbps = 80 Mbps. The other queues get a minimum bandwidth of:

- AF11: 10% of 80 Mbps = 8 Mbps

- AF21: 20% of 80 Mbps = 16 Mbps

- AF31: 30% of 80 Mbps = 24 Mbps

We see higher traffic rates because, at this moment, we are only transmitting traffic marked as EF, AF11, AF21, and AF31. There is more available bandwidth than the sum of the minimum bandwidth guarantees. Let’s generate some unmarked traffic so that we get to see the minimum bandwidth guarantees in action:

$ iperf -c 192.168.12.2 -b 50000K -i 1 -t 3600

This is what we see now in iPerf:

- EF: 18.9 Mbits/sec

- AF11: 8.39 Mbits/sec

- AF21: 14.7 Mbits/sec

- AF31: 22.0 Mbits/sec

- Class-default: 30.4 Mbits/sec

These rates are close to the minimum bandwidth guarantees that we configured (8, 16, and 24 Mbps).
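
To see how far each measured rate is from its guarantee, we can compute the relative difference. The Python sketch below uses the iPerf rates listed above and the guarantees of 8, 16, and 24 Mbps; the function name `deviation_percent` is hypothetical.

```python
# Guarantees from "bandwidth remaining percent" on the 80 Mbps that remain.
guaranteed = {"AF11": 8.0, "AF21": 16.0, "AF31": 24.0}

# iPerf rates measured while the unmarked traffic was running.
measured = {"AF11": 8.39, "AF21": 14.7, "AF31": 22.0}


def deviation_percent(measured_mbps, guaranteed_mbps):
    """Return how far the measured rate is from the guarantee, in percent.
    Positive means above the guarantee, negative means below it."""
    return (measured_mbps - guaranteed_mbps) / guaranteed_mbps * 100


for name in guaranteed:
    diff = deviation_percent(measured[name], guaranteed[name])
    print(f"{name}: {measured[name]} Mbps measured, {diff:+.1f}% vs guarantee")
```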

Conclusion

You have now learned the difference between the bandwidth and bandwidth remaining commands:

- The bandwidth command sets a minimum bandwidth based on the interface bandwidth.

- The bandwidth remaining command sets a minimum bandwidth based on the remaining bandwidth (after priority queues and RSVP reservations).

I hope you enjoyed this lesson. If you have any questions please leave a comment.